Minors and social networks: a code of conduct

Update: AFNOR Spec 2305 was published on November 23, 2023.

Young or old, you will probably remember the unprecedented measure announced in March 2023 by TikTok, the social network increasingly popular with young people: the Chinese short-video platform would display a warning after sixty minutes of use, asking users who had declared themselves under 18 to enter a passcode to continue. The measure followed repeated warnings from psychologists, educators, legal experts and other specialists about the abuse and harm that compulsive use of social networks can inflict on minors. The e-Enfance association records 30,000 calls a year to its 3018 helpline, 4 in 10 of them made by minors. But TikTok’s measure is easy to circumvent: users can turn off the notification or lie about their age. To protect minors, one of the challenges is therefore to find an alternative to age self-declaration that respects individual freedoms and personal data.

Consensus on best practices

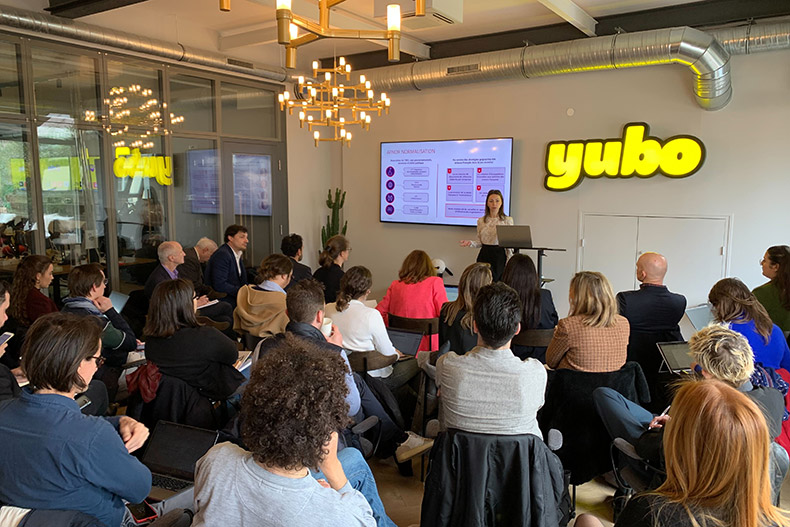

This is one of the subjects addressed in an AFNOR guide entitled “Prévention des risques et protection des mineurs sur les réseaux sociaux” (Risk prevention and protection of minors on social networks). This pre-normative work is scheduled for publication in the fourth quarter of 2023 as an AFNOR Spec (in French only). It was launched on Tuesday, March 21, 2023, hosted in Paris by Yubo, a platform aimed specifically at 13- to 25-year-olds. Despite the adoption of the DSA (Digital Services Act) at European level, “the law, regulation and regulatory bodies have everything to gain from bringing the players around the table to agree, by consensus, on best practices,” explains Julie Latawiec, who is coordinating the project for AFNOR. Platforms and regulators are already on board. These include Meta, which has invested heavily in artificial intelligence to detect illicit accounts and content, as well as the CNIL and Arcom. In the longer term, the document could be proposed as a voluntary standard to the European (CEN) and international (ISO) standardization bodies. A first international standard, IEEE 2089, was adopted in 2021 and is to be set out in a European pre-normative agreement.

The AFNOR Spec 2302 project focuses on three main areas:

User assurance and confidence

- identity verification

- combating anonymity

- parental consent

- age verification and estimation

- fake account creation

Content detection and moderation

- inappropriate content

- automated detection (AI)

- automated and human moderation

- personal data protection

Prevention and education

- awareness

- information

- transparency

- education

- support